Risk-based Assessment of Generative AI: Is ChatGPT Secure-by-Design?

Lalit Ahluwalia is committed to redefining the future of cybersecurity by adding a “T-Trust” tenet to the conventional CIA Triad. Here, Lalit explores the cybersecurity risks associated with the use of OpenAI’s ChatGPT and related generative AI models.

Generative AI systems have spurred a revolution that holds the potential to reshape industries, enhance productivity, and streamline processes. Among these groundbreaking innovations, ChatGPT, developed by OpenAI, has emerged as a leading generative AI model that boasts capabilities to generate coherent and contextually relevant text, transforming the way humans interact with machines.

However, as this new frontier unfolds, the pressing question lingers: Is ChatGPT truly secure-by-design?

Here, we take a closer look at generative AI systems, explain why risk-based assessment of AI is important, and explore the potential cybersecurity concerns associated with ChatGPT use from a “security-by-design” standpoint.

The Generative AI Boom: Transforming Interactions

Generative AI systems are a class of machine learning models that can generate content, including text, images, and even music, that resembles human creative work. Built on neural networks, these systems analyze vast amounts of data to learn patterns and produce output that can be difficult to distinguish from human-generated content.

Among them, ChatGPT has gained prominence due to its natural language processing prowess, which allows it to engage in text-based conversations that are often indistinguishable from interactions with humans.

OpenAI’s Take on Data Privacy

OpenAI, cognizant of the concerns around data privacy, has implemented stringent data privacy policies and provided comprehensive Frequently Asked Questions (FAQs) to address user concerns.

These measures are aimed at alleviating the worries of individuals who interact with ChatGPT. However, the central question remains: Does ChatGPT inadvertently “leak” personal information during its interactions?

OpenAI makes it clear:

“We do not sell your data or share your content with third parties for marketing purposes.” Source: Data usage for consumer services FAQ

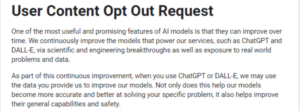

Concerned users can also opt out of ChatGPT’s data collection mechanism to mitigate potential privacy concerns. This opt-out feature restricts OpenAI from using the interaction data for improving the model, providing a layer of reassurance for those who are wary of sharing their conversations. To opt out of ChatGPT’s data collection, fill out OpenAI’s Opt Out Request Form.

Why Risk-Based Assessment of Generative AI?

The meteoric rise of generative AI systems has propelled the need for rigorous risk-based assessment. With innovation comes responsibility, and while the potential applications of these systems are limitless, so are the associated risks.

The allure of automating content generation and communication needs to be tempered with a comprehensive understanding of the vulnerabilities that come with it.
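One common way to make a risk-based assessment concrete is to score each risk by likelihood and impact and then rank the results. The sketch below is illustrative only: the risk names echo concerns raised in this article, while the `Risk` class, the `rank_risks` helper, the 1 to 5 scales, and the ratings themselves are assumptions chosen for demonstration, not measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One entry in a simple likelihood-by-impact risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def rank_risks(risks):
    """Return the risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical ratings for illustration; a real assessment would derive
# these from an organization's own threat model and usage patterns.
RISKS = [
    Risk("Sensitive information exposed in conversations", 3, 5),
    Risk("Misleading or harmful generated content", 4, 3),
    Risk("AI-assisted social engineering messages", 4, 4),
]

if __name__ == "__main__":
    for risk in rank_risks(RISKS):
        print(f"{risk.score:>2}  {risk.name}")
```

Ranking by score gives a team a defensible order in which to address risks, and the ratings can be revisited as the way the tool is used changes.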

Security by Design: A Closer Look

ChatGPT, while revolutionary, is not immune to cybersecurity vulnerabilities. It is crucial to acknowledge that no technology is infallible, and ChatGPT is no exception. The cybersecurity risks associated with its use are real and multifaceted.

From the potential to generate misleading or harmful content to exposing sensitive information during conversations, the risks cannot be ignored.
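One way to reduce the risk of exposing sensitive information is to redact obvious identifiers from a prompt before it leaves the user's machine. The following is a minimal sketch, not a complete data loss prevention solution: the `redact` function and the two patterns (email addresses and 13 to 16 digit card-like numbers) are assumptions made for illustration, and real deployments would need much broader, well-tested detection.

```python
import re

# Illustrative patterns only (assumptions); they will miss many kinds of
# sensitive data and may flag some harmless digit sequences.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(prompt: str) -> str:
    """Replace each matched identifier with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label} REDACTED]", prompt)
    return prompt


if __name__ == "__main__":
    raw = "Contact jane.doe@example.com about card 4111 1111 1111 1111."
    print(redact(raw))
```

Running the redaction on the client side means the placeholder, rather than the original value, is what would be sent in the conversation.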

Exploiting ChatGPT: A Hacker’s Playground?

The cybersecurity landscape takes a darker turn when considering how hackers can exploit ChatGPT. By steering conversations with the model, attackers can craft socially engineered messages that deceive and manipulate individuals, potentially leading to data breaches or financial losses.

The ease with which ChatGPT generates human-like text is a double-edged sword, capable of either assisting legitimate users or aiding malicious actors.

ChatGPT and Social Engineering Attacks

Generative AI systems like ChatGPT hold the potential to become powerful tools in social engineering attacks. By imitating trusted individuals or entities, attackers can manipulate victims into divulging sensitive information, installing malware, or performing other malicious actions. This raises a critical question about the role of technology in exacerbating social engineering vulnerabilities.

As AI models evolve and become more sophisticated, the emergence of models such as GPT-4 introduces the unnerving possibility of their use in crafting or modifying various forms of malware. These models could potentially generate malicious code, exploit vulnerabilities, or craft persuasive phishing messages that evade detection by traditional security measures.

Conclusion

As the generative AI revolution continues to unfold, ChatGPT and its counterparts offer immense potential but also pose significant cybersecurity challenges. The rise of generative AI systems has illuminated the path to a future where interactions between humans and machines are redefined.

While the road ahead might be uncertain, one thing is clear: only through a combination of foresight, collaboration, and innovation can we hope to unlock the true potential of ChatGPT while fortifying our digital landscape against the looming threats.